Generative AI for Scientists: A Slightly Helpful, Wildly Overhyped, Energy Hog

Debunking some myths for the gen AI novice

Image: Robert Seymour, March of the Intellect

I’ve been repeatedly asked to weigh in on how generative AI and its underlying architecture, large language models (LLMs)\(^1\), can help scientists be more productive.

\(1\) Sorry, I really hate acronyms, but “LLM” is used widely.

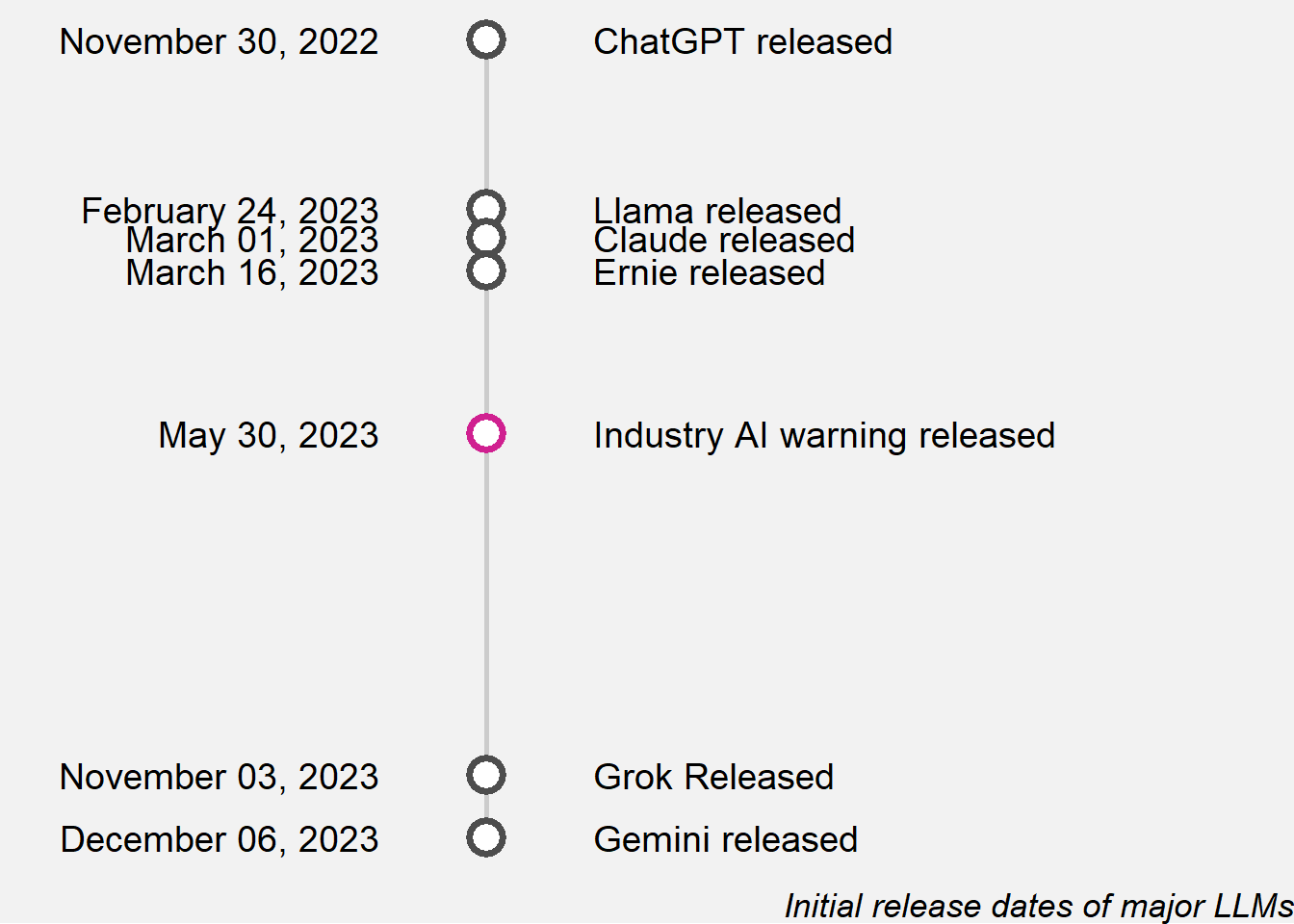

The very quick answer is that this is a rapidly changing landscape with tools appearing and disappearing. LLMs are an incredible achievement and have the potential to support many science-focused applications, but this technology is being vastly over-hyped and, in some instances, its application is causing harm. It seems that many scientists do not understand how these models work, and I am concerned that they are not exercising healthy scientific skepticism about the actual utility of these tools.

1 Useful Applications of LLMs

The best application of these tools seems to be helping amateurs do simple tasks in domains where they have little knowledge, producing results that are reasonably good, albeit still with an amateur flavor. One example is video editing. I am terrible at this and do not attempt anything beyond clipping off the beginnings and ends of long videos, and yet this task takes ages, partly because I don’t know the software landscape for video editing. There are AI tools that will transcribe the video (imperfectly, but still, not bad), and a user can directly edit that transcript to remove sections. No one is winning awards for the production of my videos, but still, it gets the job done.

As a programmer, I have found that generative AI tools, with careful prompt engineering, can often correctly answer very simple coding problems. They can explain small code snippets (I’m a hot mess with HTML and appreciate what ChatGPT can tell me about it). If you have GitHub Copilot\(^2\) integrated into a coding IDE like RStudio, it’s pretty handy!

\(2\) GitHub Copilot is a generative AI tool that is not Microsoft Copilot, a confusing situation since Microsoft owns both products.

This results in a small improvement in efficiency - maybe 5% (who knows?), but not some quantum leap. Beyond that, I’m pretty disappointed by the outputs from LLMs. These models famously “hallucinate”, producing confident-sounding but completely incorrect answers. We are definitely not about to solve all the world’s problems with ChatGPT, Llama, Gemini, or any of the other chat bots, despite Sam Altman’s excessively optimistic prognoses.

2 Why so limiting?

LLMs are text prediction models - nothing more, nothing less. They are built by ingesting large quantities of human-generated text-based data and applying some rather sophisticated matrix algebra, non-linear transformations and calculus (truly, these models are a mathematical accomplishment). But, at the end of the day, they produce content based on the most likely string of words for a given query (mathematicians, I know I’m oversimplifying things!! Don’t come at me!!!). They are built on human knowledge, human errors and human biases, and hence can amplify all of those joys and sorrows of humanity. And that’s it. That’s all they do. In some ways, an LLM is a combination of glorified search engine and turbocharged auto-complete, except it needs the power of an entire nuclear reactor to do its job.
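
To make “the most likely string of words” a little more concrete, here is a toy sketch in Python. It is emphatically not how a real LLM works internally: it just counts which word most often follows another in a tiny made-up corpus and then auto-completes greedily. The corpus and the function names (`most_likely_next`, `complete`) are invented for illustration only.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration);
# a real LLM is trained on vastly more text.
corpus = (
    "the model predicts the next word . "
    "the model predicts text . "
    "the scientist checks the model ."
).split()

# Count how often each word follows each other word (a bigram table).
following = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    following[current_word][next_word] += 1


def most_likely_next(word):
    """Return the word most often seen after `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def complete(start_word, extra_words=3):
    """Greedy auto-complete: keep appending the most likely next word."""
    words = [start_word]
    for _ in range(extra_words):
        next_word = most_likely_next(words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


print(complete("scientist"))  # prints "scientist checks the model"
```

Note that the sketch can only echo patterns present in its training text, and it returns nothing at all for a word it has never seen. Real LLMs replace the count table with a neural network over much longer contexts, but the output is still a prediction about likely text, not a checked fact.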

I know some scientists ask ChatGPT how to statistically analyze experiments. This is not a terrible starting point for statistical analysis, but given hallucinations, I hope those scientists are checking those results. One scientist boasted to me that he could upload data straight into ChatGPT and it would analyze it for him. I’m not sure where to start with what is wrong with that process, but most certainly, scientists need to know and supervise the statistical model used to analyze their results! This is science 101, my peeps.

As mentioned, LLMs are vastly over-hyped. Sam Altman infamously stated at one point that AI would run ‘experiments’ for us scientists while we slept, enabling leaps and bounds in scientific discovery (I cannot find the original tweet, one that was heavily lambasted by the academic research community, but here is a statement from former Google CEO Eric Schmidt making the same claim). This idea seems dubious to me, mostly because generative AI cannot truly think; its advantage lies in aggregating information.

3 AGI and LLMs

And then there is the claim that these models might gain “AGI” or “artificial general intelligence”. There is not space here to explain what a specious claim that is (also, see this excellent essay explaining why AI does not make art), but the fact that this claim was first raised by AI companies as they monetized their products tells us all we need to know. Sam Altman and company were so deeply concerned about their products gaining AGI and causing human extinction, they decided to commercialize them? Okay, buddy. Nice PR stunt.

This seems a bit obvious to state, but ICYMI: generative AI is a very expensive technology, one that is currently without a sustainable business model. That is why these products are being so heavily promoted. AI companies cannot rely on venture capital funding forever; they eventually need customers and cash to survive. Just because AI companies are making wild claims about their technology does not mean anyone should believe it, no matter how many times AI marketing firms repeat those claims or how much advertising money they spend to broadcast those claims to the entire world at maximum volume.

I realize many of us are or were deeply impacted by a movie from our generation showing AI going rogue:

- 2001: A Space Odyssey

- The Terminator

- The Matrix

- M3gan

It’s important to remember that these are movies written by screenwriters, not pointed warnings from people who understand the perils of specific technologies.\(^3\) While there are genuine messages about being cautious with deadly technologies, we do not have to wholly subscribe to the premise that robots will eventually ‘outsmart’ humans. The more likely scenario is that humans will program a machine that may unintentionally or intentionally destroy other humans. This has, of course, already been realized. Sad to say, but we temporarily lose control of technology all the time.

\(3\) Screenwriters are often concerned with telling a message that is directly relevant to viewers at the time it is written. The Matrix is a story about trans identity and not being forced into gender conformity. The Terminator is concerned with unchecked technological advances along with concerns about nuclear annihilation and the nature of free will versus destiny. Sure, they discuss the perils of technology, but that doesn’t mean they are warnings about sentient robots.

Overall, I am much less worried about AI becoming sentient and much more concerned about unintended but very real harms caused when AI is deployed by my fellow humans to address real world problems. There are many examples: generating misinformation, unreliable ‘predictive policing’, and amplifying bias. And none of these encompasses what is happening as AI slop overwhelms online and offline content (i.e. books).

4 The Statistician’s View

A few months ago, I attended the Joint Statistical Meetings (the “JSM” to those of us in the know) put on by the American Statistical Association in Portland, Oregon. The biggest topic of discussion among JSM attendees was AI (largely generative AI) and its underlying architecture, large language models. Are LLMs replacing us as statisticians? Are we all about to become obsolete, and should we pivot our careers to production engineering (an actual suggestion I heard at the meetings)?

There was a range of views on the matter: AI researchers in particular seemed to view their craft as the inevitable dominant path for the foreseeable future. A keynote delivered by Jason Matheny of the RAND Corporation rattled off a long list of ways statisticians can and should contribute to the AI landscape. Other attendees remained highly skeptical. One staunch critic, Victor Solo of the University of New South Wales, called LLMs “over hyped” and “pre-scientific”. He gave a talk to a packed, standing-room-only audience, objecting to the brute force methodology of LLMs and their over-reliance on empiricism over domain knowledge and scientific principles. Many others were more circumspect: LLMs are here whether we like them or not, so how do we adapt? (This is largely my view, but with a healthy dose of skepticism added).

There were innumerable talks about using deep learning (a subset of AI), though not specifically generative AI, to improve the quality of statistical estimation. I don’t want to spend time chasing down one million references no one reading this blog is likely to follow up on, but this is a rich area of inquiry with many useful applications, especially for predictive modelling. In particular, deep learning has great potential to assist clinicians with diagnoses that rely on imaging, such as cancer detection.

5 Ethics and LLMs

Naturally, there were a number of ill-advised proposed applications for AI presented at the JSM. Probably the most disturbing AI application discussed was using LLMs to replace clinicians for “underserved populations” (the words used by the academicians who led a workshop). These underserved populations may encompass whole regions (e.g. remote rural areas) or groups of people lacking health insurance and access to regular medical care due to their economic circumstances. A large portion of ‘underserved populations’ are poor communities that lack health insurance and may have spotty medical care history, making model-based predictions less accurate (these models rely heavily on complete or nearly complete information to provide individualized predictions). The idea of AI clinicians for these underserved areas was pitched as “democratizing healthcare” - a surprising term to use, given this was the exact language used by Elizabeth Holmes while she perpetrated a years-long fraud for her blood testing company Theranos. This is hardly the association anyone pitching a new technology would want.

As mentioned, this technology could assist actual trained clinicians and hence benefit their patients. But I am skeptical that anyone wants an AI doc bot. People want real services, not a cheap imitation. And is this how things should be? If you’re poor, you get a chat bot providing medical care, and the financially comfortable get real doctors, nurses and actual medical professionals?\(^4\)

\(4\) Wired magazine recently published an article on how wealth inequality affects the personal care economy: “The Rich Can Afford Personal Care. The Rest Will Have to Make Do With AI.”

While health care LLMs exist, their level of deployment is unclear, and it seems likely that the FDA will intervene on this subject. Nevertheless, the breathless and well-meaning enthusiasm for this from AI researchers was disturbing. These researchers appeared not to understand the problem they were proposing to solve, nor did they have any investment in the final product. If the technology didn’t work and failed to help their target population, what did it matter to them? Would they even know?

6 Energy Usage and LLMs

This blog post is not intended to be “10 things I hate about gen AI”, but there is one more criticism that should be mentioned: the massive electricity use required for AI model training and deployment. Bloomberg recently documented how AI data centers are “wreaking havoc” (their words) on the world power grid. If the prospect of AI data centers using vast quantities of electricity feels hypothetical or remote, you may want to look into where your regional power comes from and which AI data centers have tapped, or are considering tapping, into your local source. This is a very real threat facing my regional community in North Idaho/Eastern Washington as we try to survive uncertain and increasingly extreme weather. Several large data centers have relocated to the region where I live and used so much energy that Washington State is struggling to meet local demand. Meanwhile, the Pacific Northwest has experienced extreme climate events like the 2021 heat dome that sent temperatures soaring to 121°F in early summer and a polar vortex in January 2024 that sent temperatures plunging to -40°F wind chill. Thousands of people died and regional energy usage spiked as we all struggled to stay cool or stay warm during those events. For these extreme climate events, having reliable electricity was a matter of life or death. Is generative AI worth its massive energy debt?\(^5\)

\(5\) It has been suggested that it is alright to ignore these climate implications because AI will solve the climate crisis for us. Um sure, maybe. That’s technically possible under the framework that most actions have a non-zero probability associated with them, however small. But, there’s currently very thin evidence to support this view.

7 The Future

We are running out of data\(^6\) as the full extent of human-generated text is consumed by LLMs. It has also been shown that training models on LLM-generated text causes them to collapse. However, this is a rapidly advancing field that is likely to overcome these problems, not to mention that LLMs are becoming more efficient. Will these changes be enough to make generative AI truly useful and sustainable? Simon Willison (reputable programmer and LLM groker) reports that LLMs can currently be useful for a wide range of scientific applications, but that learning to use them involves a steep learning curve. Easing the barriers to adoption may well be the needed advance that develops this year. Let’s all continue to keep an eye on this situation and evaluate it as it unfolds. Nature regularly publishes excellent articles that explore utility and reliability in the generative AI space. The only thing I ask is that you evaluate these methods with both a welcoming mindset and a healthy skepticism that is grounded in reality.

\(6\) Even after pirating many copyrighted works!